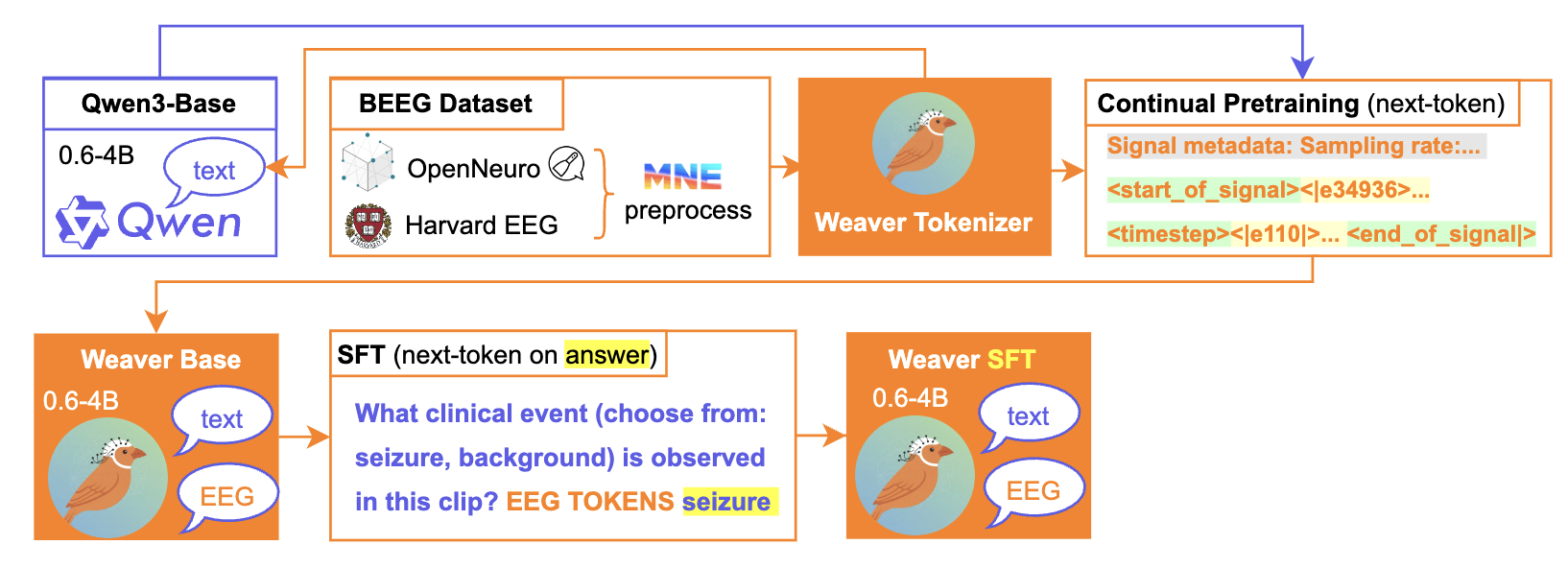

Dissecting EEG-Language Models: Token Granularity, Model Size, and Cross-Site Generalization

We investigate how token granularity and model size affect EEG-language model performance in both in-distribution and cross-site scenarios. We find that token granularity is a critical, task-dependent scaling dimension for clinical EEG models, and that it is sometimes more important than model size.